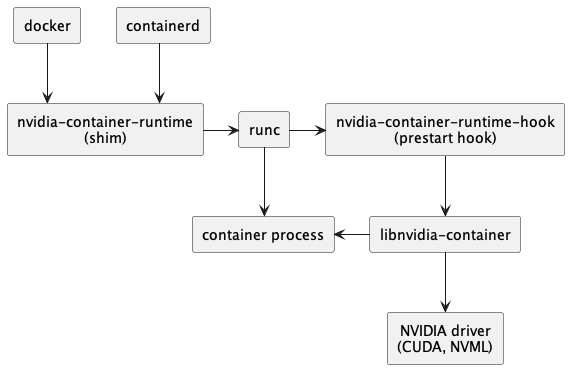

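The previous post covered removing privileged mode from our Docker containers (→ Docker privileged permissions and security practices). This post covers using NVIDIA GPUs in Docker containers and verifying that they can actually be called. First, it helps to understand the NVIDIA Container Runtime architecture, that is, how a GPU is made usable inside a container.

NVIDIA Container Runtime architecture: the basic idea is to use a hook that maps the host's GPU driver into the container.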

The NVIDIA Container Runtime Hook

This component is included in the nvidia-container-toolkit package.

This component includes an executable that implements the interface required by a runC prestart hook. This script is invoked by runC after a container has been created, but before it has been started, and is given access to the config.json associated with the container (e.g. this config.json). It then takes information contained in the config.json and uses it to invoke the nvidia-container-cli CLI with an appropriate set of flags. One of the most important flags being which specific GPU devices should be injected into the container.
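The device-selection step can be made concrete with a minimal C++ sketch of how a hook might interpret the `NVIDIA_VISIBLE_DEVICES` environment variable found in the container's config. This is not NVIDIA's implementation, and the names `GpuMode`, `GpuSelection` and `parseVisibleDevices` are hypothetical. The value semantics follow NVIDIA's documentation as we understand it:

- a comma-separated list of GPU indices or UUIDs selects those GPUs;
- `all` selects every GPU;
- `none` selects no GPUs but still enables driver capabilities;
- `void`, empty or unset means the runtime behaves like plain runc.

```cpp
#include <sstream>
#include <string>
#include <vector>

// Hypothetical sketch (not NVIDIA's code): interpret NVIDIA_VISIBLE_DEVICES.
enum class GpuMode {
    Runc,  // unset, empty or "void": behave like plain runc, inject nothing
    None,  // "none": no GPU devices, but driver capabilities still enabled
    All,   // "all": every GPU on the host
    List   // comma-separated GPU indices or UUIDs
};

struct GpuSelection {
    GpuMode mode;
    std::vector<std::string> devices;  // filled only when mode == GpuMode::List
};

GpuSelection parseVisibleDevices(const char* value) {
    if (value == nullptr) return {GpuMode::Runc, {}};
    const std::string v(value);
    if (v.empty() || v == "void") return {GpuMode::Runc, {}};
    if (v == "none") return {GpuMode::None, {}};
    if (v == "all") return {GpuMode::All, {}};

    GpuSelection sel{GpuMode::List, {}};
    std::stringstream ss(v);
    std::string item;
    while (std::getline(ss, item, ',')) {
        if (!item.empty()) sel.devices.push_back(item);  // skip empty entries such as "0,,1"
    }
    return sel;
}
```

For example, `parseVisibleDevices(std::getenv("NVIDIA_VISIBLE_DEVICES"))` (which needs `<cstdlib>`) would return `GpuMode::All` for a container started with `NVIDIA_VISIBLE_DEVICES=all`.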

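Configuring the GPU Docker container runtime environment: Specialized Configurations with Docker (official configuration reference)

For a Docker container to use the GPU, the NVIDIA Container Runtime support environment must first be set up on the host. As of 2024/04/08, nvidia-container-runtime has been deprecated in favor of nvidia-container-toolkit, and the official documentation has moved to the NVIDIA Container Toolkit docs.

For installation, see "How to Use GPUs in Containers" (一文教你如何在容器中使用GPU); it is not repeated here. We go straight to configuring the runtime: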

```shell
# NVIDIA runtime configuration for Docker
anzhihe@anzhihe-ubuntu2:~$ cat /etc/docker/daemon.json
{
    "runtimes": {
        "nvidia": {
            "path": "nvidia-container-runtime",
            "runtimeArgs": []
        }
    }
}
```
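The config above gives `"path"` as a bare program name, so the binary must be resolvable from the Docker daemon's `PATH`. This is our understanding, and an absolute path sidesteps the question. The small C++ sketch below resolves a name against `$PATH` the usual way, as a quick check that `nvidia-container-runtime` is installed where a PATH lookup would find it. The helper names are hypothetical, and the shell's `PATH` is only an approximation of the daemon's (for example, under systemd).

```cpp
#include <cstdlib>
#include <sstream>
#include <string>
#include <sys/stat.h>
#include <unistd.h>

// True if `path` is a regular file that the current user may execute
static bool isExecutableFile(const std::string& path) {
    struct stat st;
    return stat(path.c_str(), &st) == 0 && S_ISREG(st.st_mode) &&
           access(path.c_str(), X_OK) == 0;
}

// Hypothetical helper: resolve an executable name against $PATH.
// Names containing '/' are checked as-is. Returns "" when nothing matches.
std::string findInPath(const std::string& name) {
    if (name.find('/') != std::string::npos) {
        return isExecutableFile(name) ? name : "";
    }
    const char* pathEnv = std::getenv("PATH");
    if (pathEnv == nullptr) return "";
    std::stringstream dirs(pathEnv);
    std::string dir;
    while (std::getline(dirs, dir, ':')) {
        if (dir.empty()) continue;  // this sketch simply skips empty PATH entries
        const std::string candidate = dir + "/" + name;
        if (isExecutableFile(candidate)) return candidate;
    }
    return "";
}
```

Usage: `findInPath("nvidia-container-runtime")` returning an empty string suggests the toolkit is not installed, or not installed on the searched `PATH`.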

```shell
# CUDA version inside the container
anzhihe@anzhihe-ubuntu2:~$ nvcc -V
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2019 NVIDIA Corporation
Built on Wed_Oct_23_21:14:42_PDT_2019
Cuda compilation tools, release 10.2, V10.2.89
```

```shell
# NVIDIA devices are mounted correctly inside the container
root@anzhihe-ubuntu2:/# ll /dev|egrep -i "nvmap|nvhost*"
crw-rw---- 1 root video 506, 1 Jan 28 2018 nvhost-as-gpu
crw-rw---- 1 root video 242, 0 Jan 28 2018 nvhost-ctrl
crw-rw---- 1 root video 506, 2 Jan 28 2018 nvhost-ctrl-gpu
crw-rw---- 1 root video 242, 62 Jan 28 2018 nvhost-ctrl-isp
crw-rw---- 1 root video 242, 34 Jan 28 2018 nvhost-ctrl-nvcsi
crw-rw---- 1 root video 242, 10 Jan 28 2018 nvhost-ctrl-nvdec
crw-rw---- 1 root video 242, 14 Jan 28 2018 nvhost-ctrl-nvdec1
crw-rw---- 1 root video 242, 54 Jan 28 2018 nvhost-ctrl-nvdla0
crw-rw---- 1 root video 242, 58 Jan 28 2018 nvhost-ctrl-nvdla1
crw-rw---- 1 root video 242, 46 Jan 28 2018 nvhost-ctrl-pva0
crw-rw---- 1 root video 242, 50 Jan 28 2018 nvhost-ctrl-pva1
crw-rw---- 1 root video 506, 6 Jan 28 2018 nvhost-ctxsw-gpu
crw-rw---- 1 root root 506, 3 Jan 28 2018 nvhost-dbg-gpu
crw-rw---- 1 root video 506, 0 Jan 28 2018 nvhost-gpu
crw-rw---- 1 root video 242, 61 Jan 28 2018 nvhost-isp
crw-rw---- 1 root video 242, 41 Jan 28 2018 nvhost-isp-thi
crw-rw---- 1 root video 242, 25 Jan 28 2018 nvhost-msenc
crw-rw---- 1 root video 242, 33 Jan 28 2018 nvhost-nvcsi
crw-rw---- 1 root video 242, 9 Jan 28 2018 nvhost-nvdec
crw-rw---- 1 root video 242, 13 Jan 28 2018 nvhost-nvdec1
crw-rw---- 1 root video 242, 53 Jan 28 2018 nvhost-nvdla0
crw-rw---- 1 root video 242, 57 Jan 28 2018 nvhost-nvdla1
crw-rw---- 1 root video 242, 29 Jan 28 2018 nvhost-nvenc1
crw-rw---- 1 root video 242, 21 Jan 28 2018 nvhost-nvjpg
crw-rw---- 1 root root 506, 4 Jan 28 2018 nvhost-prof-gpu
crw-rw---- 1 root video 242, 45 Jan 28 2018 nvhost-pva0
crw-rw---- 1 root video 242, 49 Jan 28 2018 nvhost-pva1
crw-rw---- 1 root video 506, 7 Jan 28 2018 nvhost-sched-gpu
crw-rw---- 1 root video 242, 1 Jan 28 2018 nvhost-tsec
crw-rw---- 1 root video 242, 5 Jan 28 2018 nvhost-tsecb
crw-rw---- 1 root video 506, 5 Jan 28 2018 nvhost-tsg-gpu
crw-rw---- 1 root video 242, 65 Jan 28 2018 nvhost-vi
crw-rw---- 1 root video 242, 37 Jan 28 2018 nvhost-vi-thi
crw-rw---- 1 root video 242, 17 Jan 28 2018 nvhost-vic
crw-rw---- 1 root video 10, 61 Jan 28 2018 nvmap
```

Next, build a CUDA test program with ROS (catkin) inside the container to verify that the GPU can be called. The test program is:

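```cuda
#include <iostream>
#include <cuda_runtime.h>

// CUDA kernel: add two vectors element by element
__global__ void vectorAdd(const int *a, const int *b, int *c, int n) {
    int index = blockIdx.x * blockDim.x + threadIdx.x;
    if (index < n) {
        c[index] = a[index] + b[index];
    }
}

int main() {
    const int n = 500;  // launched as one block of n threads, so n must stay <= 1024
    int a[n], b[n], c[n];
    int *dev_a, *dev_b, *dev_c;

    // Initialize the inputs so the result can be checked (c[i] should equal 3 * i)
    for (int i = 0; i < n; i++) {
        a[i] = i;
        b[i] = 2 * i;
    }

    // Allocate device memory and copy the inputs to the device
    cudaMalloc((void**)&dev_a, n * sizeof(int));
    cudaMalloc((void**)&dev_b, n * sizeof(int));
    cudaMalloc((void**)&dev_c, n * sizeof(int));
    cudaMemcpy(dev_a, a, n * sizeof(int), cudaMemcpyHostToDevice);
    cudaMemcpy(dev_b, b, n * sizeof(int), cudaMemcpyHostToDevice);

    // Launch the CUDA kernel, then pick up any error from the launch or earlier calls
    vectorAdd<<<1, n>>>(dev_a, dev_b, dev_c, n);
    cudaError_t err = cudaGetLastError();

    // Copy the result back to the host (blocks until the kernel has finished)
    if (err == cudaSuccess) {
        err = cudaMemcpy(c, dev_c, n * sizeof(int), cudaMemcpyDeviceToHost);
    }
    if (err != cudaSuccess) {
        std::cerr << "CUDA error: " << cudaGetErrorString(err) << std::endl;
        return 1;
    }

    // Print the result
    for (int i = 0; i < n; i++) {
        std::cout << c[i] << " ";
    }
    std::cout << std::endl;

    // Free device memory
    cudaFree(dev_a);
    cudaFree(dev_b);
    cudaFree(dev_c);
    return 0;
}
```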

```cmake
# CMakeLists.txt
cmake_minimum_required(VERSION 2.8.3)
project(my_ros_package)

set(CMAKE_CUDA_COMPILER /usr/local/cuda/bin/nvcc)

## Compile as C++11, supported in ROS Kinetic and newer
# add_compile_options(-std=c++11)

# Set CUDA compile flags (FindCUDA expects CUDA_NVCC_FLAGS to be a semicolon-separated list)
list(APPEND CUDA_NVCC_FLAGS -DMY_DEF=1)

# Set C++ compile flags
SET(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 -O3")

## Find catkin macros and libraries
## if COMPONENTS list like find_package(catkin REQUIRED COMPONENTS xyz)
## is used, also find other catkin packages
find_package(catkin REQUIRED COMPONENTS
  roscpp
)
find_package(CUDA REQUIRED)

catkin_package()

include_directories(
  ${catkin_INCLUDE_DIRS}
  ${CUDA_INCLUDE_DIRS}
)

cuda_add_executable(cuda_test_cu src/cuda_test.cu)
target_link_libraries(cuda_test_cu ${catkin_LIBRARIES} ${CUDA_LIBRARIES})

# List header files so they show up in IDEs
FILE(GLOB_RECURSE LibFiles "include/*")
add_custom_target(headers SOURCES ${LibFiles})
```

The build and run process follows (for building with ROS, see → ROS Workspace and File System):

anzhihe@anzhihe-ubuntu2:~$ source /opt/ros/melodic/setup.bash anzhihe@anzhihe-ubuntu2:~$ mkdir -p ~/catkin_ws/src anzhihe@anzhihe-ubuntu2:~$ cd ~/catkin_ws/src anzhihe@anzhihe-ubuntu2:~/catkin_ws/src$ catkin_init_workspace Creating symlink "/home/anzhihe/catkin_ws/src/CMakeLists.txt" pointing to "/opt/ros/melodic/share/catkin/cmake/toplevel.cmake" anzhihe@anzhihe-ubuntu2:~/catkin_ws/src$ cd ~/catkin_ws/ anzhihe@anzhihe-ubuntu2:~/catkin_ws$ cd ~/catkin_ws/src anzhihe@anzhihe-ubuntu2:~/catkin_ws/src$ catkin_create_pkg my_ros_package roscpp Created file my_ros_package/package.xml Created file my_ros_package/CMakeLists.txt Created folder my_ros_package/include/my_ros_package Created folder my_ros_package/src Successfully created files in /home/anzhihe/catkin_ws/src/my_ros_package. Please adjust the values in package.xml. anzhihe@anzhihe-ubuntu2:~/catkin_ws/src$ cd my_ros_package/ anzhihe@anzhihe-ubuntu2:~/catkin_ws/src/my_ros_package$ ls CMakeLists.txt include package.xml src anzhihe@anzhihe-ubuntu2:~/catkin_ws/src/my_ros_package$ vim CMakeLists.txt anzhihe@anzhihe-ubuntu2:~/catkin_ws/src/my_ros_package$ cd src/ anzhihe@anzhihe-ubuntu2:~/catkin_ws/src/my_ros_package/src$ vim cuda_test.cu anzhihe@anzhihe-ubuntu2:~/catkin_ws/src/my_ros_package/src$ cd ~/catkin_ws anzhihe@anzhihe-ubuntu2:~/catkin_ws$ catkin_make Base path: /home/anzhihe/catkin_ws Source space: /home/anzhihe/catkin_ws/src Build space: /home/anzhihe/catkin_ws/build Devel space: /home/anzhihe/catkin_ws/devel Install space: /home/anzhihe/catkin_ws/install #### #### Running command: "cmake /home/anzhihe/catkin_ws/src -DCATKIN_DEVEL_PREFIX=/home/anzhihe/catkin_ws/devel -DCMAKE_INSTALL_PREFIX=/home/anzhihe/catkin_ws/install -G Unix Makefiles" in "/home/anzhihe/catkin_ws/build" #### -- The C compiler identification is GNU 7.5.0 -- The CXX compiler identification is GNU 7.5.0 -- Detecting C compiler ABI info -- Detecting C compiler ABI info - done -- Check for working C compiler: /usr/bin/cc - skipped -- Detecting 
C compile features -- Detecting C compile features - done -- Detecting CXX compiler ABI info -- Detecting CXX compiler ABI info - done -- Check for working CXX compiler: /usr/bin/c++ - skipped -- Detecting CXX compile features -- Detecting CXX compile features - done -- Using CATKIN_DEVEL_PREFIX: /home/anzhihe/catkin_ws/devel -- Using CMAKE_PREFIX_PATH: /opt/ros/melodic -- This workspace overlays: /opt/ros/melodic -- Found PythonInterp: /usr/bin/python2 (found suitable version "2.7.17", minimum required is "2") -- Using PYTHON_EXECUTABLE: /usr/bin/python2 -- Using Debian Python package layout -- Using empy: /usr/bin/empy -- Using CATKIN_ENABLE_TESTING: ON -- Call enable_testing() -- Using CATKIN_TEST_RESULTS_DIR: /home/anzhihe/catkin_ws/build/test_results -- Found gtest sources under '/usr/src/googletest': gtests will be built -- Found gmock sources under '/usr/src/googletest': gmock will be built CMake Deprecation Warning at /usr/src/googletest/CMakeLists.txt:1 (cmake_minimum_required): Compatibility with CMake < 2.8.12 will be removed from a future version of CMake. Update the VERSION argument <min> value or use a ...<max> suffix to tell CMake that the project does not need compatibility with older versions. CMake Deprecation Warning at /usr/src/googletest/googlemock/CMakeLists.txt:41 (cmake_minimum_required): Compatibility with CMake < 2.8.12 will be removed from a future version of CMake. Update the VERSION argument <min> value or use a ...<max> suffix to tell CMake that the project does not need compatibility with older versions. CMake Deprecation Warning at /usr/src/googletest/googletest/CMakeLists.txt:48 (cmake_minimum_required): Compatibility with CMake < 2.8.12 will be removed from a future version of CMake. Update the VERSION argument <min> value or use a ...<max> suffix to tell CMake that the project does not need compatibility with older versions. 
-- Found PythonInterp: /usr/bin/python2 (found version "2.7.17") -- Looking for pthread.h -- Looking for pthread.h - found -- Performing Test CMAKE_HAVE_LIBC_PTHREAD -- Performing Test CMAKE_HAVE_LIBC_PTHREAD - Failed -- Looking for pthread_create in pthreads -- Looking for pthread_create in pthreads - not found -- Looking for pthread_create in pthread -- Looking for pthread_create in pthread - found -- Found Threads: TRUE -- Using Python nosetests: /usr/bin/nosetests-2.7 -- catkin 0.7.29 -- BUILD_SHARED_LIBS is on -- BUILD_SHARED_LIBS is on -- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -- ~~ traversing 1 packages in topological order: -- ~~ - my_ros_package -- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -- +++ processing catkin package: 'my_ros_package' -- ==> add_subdirectory(my_ros_package) CMake Deprecation Warning at my_ros_package/CMakeLists.txt:1 (cmake_minimum_required): Compatibility with CMake < 2.8.12 will be removed from a future version of CMake. Update the VERSION argument <min> value or use a ...<max> suffix to tell CMake that the project does not need compatibility with older versions. 
-- Found CUDA: /usr/local/cuda (found version "10.2") -- Configuring done -- Generating done -- Build files have been written to: /home/anzhihe/catkin_ws/build #### #### Running command: "make -j8 -l8" in "/home/anzhihe/catkin_ws/build" #### [ 50%] Building NVCC (Device) object my_ros_package/CMakeFiles/cuda_test_cu.dir/src/cuda_test_cu_generated_cuda_test.cu.o [100%] Linking CXX executable /home/anzhihe/catkin_ws/devel/lib/my_ros_package/cuda_test_cu [100%] Built target cuda_test_cu anzhihe@anzhihe-ubuntu2:~/catkin_ws$ /home/anzhihe/catkin_ws/devel/lib/my_ros_package/cuda_test_cu 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 77824 0 76644 0 76644 0 0 0 5 0 139264 0 147456 0 143744 0 144440 0 73728 0 3 0 0 0 688128 0 684840 0 684840 0 0 0 5 0 -810730320 127 -1558395524 127 -810730320 127 -1558395604 127 -1558278144 127 -1558245004 127 -810730160 127 -1558416452 127 0 0 3 0 -1558253568 127 0 0 -810730160 127 -1558414420 127 0 0 3 0 -1558251600 127 0 0 -1558245000 127 1815047265 47 1667719785 779312995 825126771 1869819392 -1560268498 127 0 0 -1558245000 127 1815047265 47 -810729776 127 -1558406048 127 -1560284846 127 0 0 -1558266112 127 -810729576 127 0 0 -1558249472 127 -1558253568 127 1 0 0 0 0 0 0 0 0 0 -1558253568 127 -810729568 127 -1562893256 127 -810730464 127 3 127 -1562893256 127 144440 0 -810730208 127 3 127 -1558406048 127 0 0 0 0 6 0 1181056 0 -1558262112 127 -1560284846 127 66316 0 1181056 0 33188 1 0 0 0 0 0 0 -810729824 127 -1558406348 127 -1563030277 127 0 0 0 0 1583843394 0 -810728784 127 -1558389528 127 
-810727080 127 -1562894904 127 0 0 -1558278144 127 0 0 -810728256 127 -1563032200 127 -1558389584 127 1313426501 1196312907 -1558266112 127 0 0 -810728256 127 -1560551520 127 -1558389584 127 1313426501 1196312907 -1558245376 127 -1558330976 127 -810728256 127 -810729593 127 -810729592 127 -810729576 127 -1558244944 127 14 0 -1558262112 127 -1558253568 127 -810729544 0 -810729528 127 -1558262112 127 -810725008 127 832 0 1179403647 65794 0 0 11993091 1 10512 0 64 0 78504 0 0 3670080 4194311 1638426 1 5 0 0

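The program compiles with nvcc and runs inside the container. Note, however, that the output shown above came from a version of the test that did not initialize `a` and `b`. The printed values (zeros followed by arbitrary numbers) are therefore undefined and do not by themselves prove that the kernel computed anything. Initializing the inputs and checking that `c[i] == a[i] + b[i]` for every element gives a much stronger confirmation that NVIDIA GPU calls work in the container.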
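A small host-side check makes that verification explicit instead of relying on eyeballing 500 printed numbers. The plain C++ sketch below is an illustration, not part of the original test. The helper names `fillInputs` and `firstMismatch` are hypothetical. `fillInputs` fills the inputs deterministically before they are copied to the device. `firstMismatch` runs after the result is copied back and reports the first index where `c[i] != a[i] + b[i]`.

```cpp
// Hypothetical helper: deterministic inputs, so every expected c[i] is 3 * i
void fillInputs(int* a, int* b, int n) {
    for (int i = 0; i < n; ++i) {
        a[i] = i;
        b[i] = 2 * i;
    }
}

// Hypothetical helper: CPU reference check for the vectorAdd kernel.
// Returns the first index i with c[i] != a[i] + b[i], or -1 if all n elements match.
int firstMismatch(const int* a, const int* b, const int* c, int n) {
    for (int i = 0; i < n; ++i) {
        if (c[i] != a[i] + b[i]) return i;
    }
    return -1;
}
```

In the test's `main`, printing "PASS" when `firstMismatch(a, b, c, n) == -1` (and the failing index otherwise) turns the run into a clear yes/no answer.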

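Later, when testing access to other hardware such as cameras from inside the container, we kept getting permission errors. The cause turned out to be that the container configuration mounted /dev as a data volume rather than passing the devices through, and the two behave differently.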

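`docker -v` and `--device` are two different Docker options with different purposes:

The -v flag mounts a volume into the container. With a host path, it bind-mounts a host directory or file onto a path inside the container, which allows data sharing and persistent storage between host and container. For example:

```shell
docker run -v /host/path:/container/path -it <image_name>
```

This command maps the host directory /host/path to /container/path inside the container.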

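The --device flag maps a host device into the container and allows the container to access it. This is what you need when a container must talk to a specific device. For example:

```shell
docker run --device=/dev/sda:/dev/xvda -it <image_name>
```

This command makes the host's /dev/sda device available inside the container as /dev/xvda.

In short, -v maps directories and files, while --device maps devices. The difference matters for hardware access. --device also adds a device cgroup rule that allows the container to use the device. A node that is merely visible through `-v /dev:/dev` is still subject to the container's default device cgroup, so without privileged mode or such a rule, opening it typically fails with "Operation not permitted". Choose the option that matches what the container actually needs.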
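To tell these failure modes apart from inside the container, a small diagnostic can try to open a device node and classify the error. The C++ sketch below makes some assumptions, so treat its labels as hints rather than proof. In our understanding, `EPERM` on an existing node usually points to the device cgroup (a bind-mounted node without `--device`). `EACCES` points to ordinary file permissions, such as the `video` group ownership seen in the listing earlier. The function name `probeDevice` and its result strings are hypothetical.

```cpp
#include <cerrno>
#include <cstring>
#include <fcntl.h>
#include <string>
#include <unistd.h>

// Hypothetical diagnostic: open a device node read-only and classify the result.
// O_NONBLOCK keeps the open from hanging on tty-like devices.
std::string probeDevice(const std::string& path) {
    const int fd = open(path.c_str(), O_RDONLY | O_NONBLOCK);
    if (fd >= 0) {
        close(fd);
        return "ok";
    }
    switch (errno) {
        case ENOENT: return "missing";            // node not present in the container
        case EPERM:  return "blocked-by-cgroup";  // likely device cgroup denial
        case EACCES: return "no-permission";      // file mode or group membership
        default:     return std::string("error: ") + std::strerror(errno);
    }
}
```

Usage: comparing `probeDevice("/dev/video3")` in a container started with only `-v /dev:/dev` against one started with `--device /dev/video3` should show the difference described above, if our understanding of the cgroup behavior holds on your kernel and Docker version.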

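The final compose.yaml used to start the Docker environment was changed to:

```yaml
version: '3.9'
services:
  default:
    image: xxxx
    stdin_open: true
    restart: always
    network_mode: host
    # privileged: true
    cpuset: "0-7"  # pin the container to CPUs 0-7
    cap_add:
      - SYS_NICE    # allow changing process priority
      - NET_ADMIN   # allow changing system network settings
      - CAP_SYSLOG  # read kernel logs (e.g. for dmesg)
    runtime: nvidia  # nvidia-container-runtime: uses a hook to map the host GPU driver into the container
    devices:
      - /dev/:/dev/  # pass host devices into the container; mapping only the devices you need is recommended over mapping all of /dev
      # - /dev/video3:/dev/video3
    volumes:
      - /dev:/dev  # bind-mount the host /dev as a volume
      - /data/autocar:/home/anzhihe/data
      - /proc/sys/vm:/host_proc/sys/vm  # modify kernel parameters
      - /sys/fs/cgroup:/sys/fs/cgroup   # use the host cgroup filesystem
    environment:
      NVIDIA_VISIBLE_DEVICES: all      # which GPUs the nvidia runtime exposes to the container
      NVIDIA_DRIVER_CAPABILITIES: all  # driver capabilities the container needs; defaults to utility
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              capabilities:
                - gpu      # give the container access to host GPUs (all GPUs by default)
                - utility  # use nvidia-smi and NVML in the container
                - compute  # use CUDA and OpenCL in the container
```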
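The compose file sets capabilities in two places. In `deploy.resources.reservations.devices`, `gpu` is a Docker device-request capability. `NVIDIA_DRIVER_CAPABILITIES` takes NVIDIA driver capability names instead. The C++ sketch below flags entries that fall outside the set listed in NVIDIA's documentation as we understand it (`compute`, `compat32`, `graphics`, `utility`, `video`, `display`, `all`). It is a hypothetical lint, not how the toolkit itself validates the value, and it does not trim whitespace around entries.

```cpp
#include <set>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical lint: return entries of an NVIDIA_DRIVER_CAPABILITIES value
// that are not in the documented capability set. Empty result = all recognized.
std::vector<std::string> unknownCapabilities(const std::string& value) {
    static const std::set<std::string> known = {
        "compute", "compat32", "graphics", "utility", "video", "display", "all"};
    std::vector<std::string> unknown;
    std::stringstream ss(value);
    std::string item;
    while (std::getline(ss, item, ',')) {
        if (!item.empty() && known.count(item) == 0) unknown.push_back(item);
    }
    return unknown;
}
```

For example, `unknownCapabilities("compute,gpu")` returns `{"gpu"}`, a hint that `gpu` belongs in the `deploy` section rather than in the environment variable.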

References:

"Nvidia Container Runtime is like a bridge: it lets Docker containers use the computer's graphics accelerator (GPU) so compute tasks run faster"

@刘郎