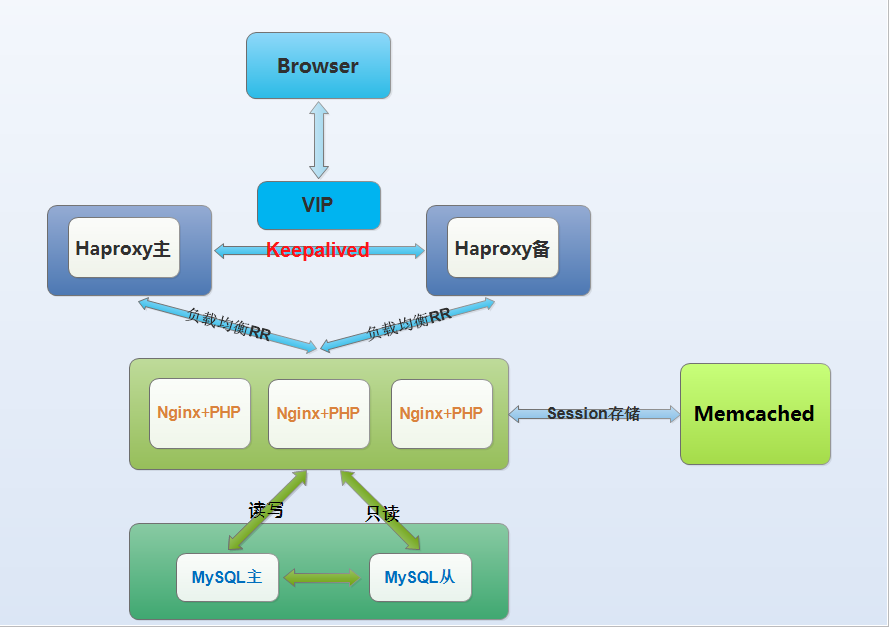

One-Click Deployment of Haproxy + Keepalived with SaltStack

1. Installing and configuring the base packages

    [root@linux-node1 /srv/salt/prod]# mkdir {cluster,modules}
    [root@linux-node1 /srv/salt/prod]# cd modules/
    [root@linux-node1 /srv/salt/prod/modules]# mkdir {haproxy,keepalived,memcached,nginx,php,pkg}
    [root@linux-node1 /srv/salt/prod/modules]# ls
    haproxy  keepalived  memcached  nginx  php  pkg
    [root@linux-node1 /srv/salt/prod/modules]# tree /srv/salt/prod
    /srv/salt/prod
    ├── cluster          # cluster layer, referenced by business services
    └── modules          # module management layer
        ├── haproxy
        ├── keepalived
        ├── memcached
        ├── nginx
        ├── php
        └── pkg          # base package management module
    [root@linux-node1 /srv/salt/prod/modules]# cd pkg

    [root@linux-node1 /srv/salt/prod/modules/pkg]# cat make.sls    # base package configuration
    make-pkg:
      pkg.installed:
        - pkgs:
          - gcc
          - gcc-c++
          - glibc
          - make
          - autoconf
          - openssl
          - openssl-devel
          - pcre
          - pcre-devel

2. Deploying Haproxy

Approach: first install the software by hand on one machine, then encode the same steps as Salt states. Write once, run many times.

    [root@linux-node1 /srv/salt/prod/modules/haproxy]# cat install.sls
    include:
      - modules.pkg.make

    haproxy-install:
      file.managed:                       # distribute the source tarball
        - name: /usr/local/src/haproxy-1.6.3.tar.gz
        - source: salt://modules/haproxy/files/haproxy-1.6.3.tar.gz
        - mode: 755
        - user: root
        - group: root
      cmd.run:                            # compile and install
        - name: cd /usr/local/src && tar zxf haproxy-1.6.3.tar.gz && cd haproxy-1.6.3 && make TARGET=linux2628 PREFIX=/usr/local/haproxy-1.6.3 && make install PREFIX=/usr/local/haproxy-1.6.3 && ln -s /usr/local/haproxy-1.6.3 /usr/local/haproxy
        # If the unless command returns true (the symlink already exists),
        # cmd.run is skipped, which prevents a repeated install.
        - unless: test -L /usr/local/haproxy
        - require:
          - pkg: make-pkg
          - file: haproxy-install

    haproxy-init:                         # manage the init script
      file.managed:
        - name: /etc/init.d/haproxy
        - source: salt://modules/haproxy/files/haproxy.init
        - mode: 755
        - user: root
        - group: root
        - require_in:
          - file: haproxy-install
      cmd.run:                            # register the service to start at boot
        - name: chkconfig --add haproxy
        - unless: chkconfig --list | grep haproxy

    net.ipv4.ip_nonlocal_bind:            # kernel parameter: allow binding to a non-local IP, needed for the VIP
      sysctl.present:
        - value: 1

    /etc/haproxy:
      file.directory:                     # manage the configuration directory
        - user: root
        - group: root
        - mode: 755

    [root@linux-node1 /srv/salt/prod/modules]# salt '*' state.sls modules.haproxy.install saltenv=prod    # apply the sls file to install haproxy
    [root@linux-node1 /srv/salt/prod/modules]# ls -l /usr/local/haproxy
    lrwxrwxrwx 1 root root 24 Jul 17 03:06 /usr/local/haproxy -> /usr/local/haproxy-1.6.3
    [root@linux-node2 ~]# ps aux|grep yum
    root  3187  2.5  1.4 328920 27236 ?      S   08:04  0:00 /usr/bin/python /usr/bin/yum -y install pcre-c++ openssl-devel
    root  3239  0.0  0.0 112648   960 pts/0  R+  08:05  0:00 grep --color yum

    Summary for linux-node2.example.com
    ------------
    Succeeded: 7 (changed=1)
    Failed:    0
    ------------
    Total states run: 7

    [root@linux-node2 ~]# ls -l /usr/local/haproxy
    lrwxrwxrwx 1 root root 24 Jul 29 08:14 /usr/local/haproxy -> /usr/local/haproxy-1.6.3
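The `unless: test -L /usr/local/haproxy` guard is what makes the compile step safe to run repeatedly. The following is only a rough shell sketch of that logic, not how Salt implements it; the `install_once` function and the temporary paths are hypothetical and stand in for the real build.

```shell
#!/bin/sh
# Sketch of the "unless: test -L <link>" pattern: do the work only when
# the marker symlink is missing. All paths are throwaway temp paths.

install_once() {
    target="$1"   # versioned install directory
    link="$2"     # symlink that marks a finished install

    if test -L "$link"; then
        echo "skipped"
        return 0
    fi
    # Stand-in for the real "make && make install && ln -s" step.
    mkdir -p "$target" && ln -s "$target" "$link" && echo "installed"
}

workdir=$(mktemp -d)
first=$(install_once "$workdir/haproxy-1.6.3" "$workdir/haproxy")
second=$(install_once "$workdir/haproxy-1.6.3" "$workdir/haproxy")
echo "first run: $first, second run: $second"
rm -rf "$workdir"
```

The second call finds the symlink and does nothing, which mirrors why a second `state.sls` run reports no change for the `cmd.run` state.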

3. Referencing Haproxy from the business (cluster) layer

    [root@linux-node1 ~]# cd /srv/salt/prod/cluster && mkdir files && cd files
    [root@linux-node1 /srv/salt/prod/cluster/files]# cat haproxy-outside.cfg    # central config file for the externally facing haproxy service
    global                                            # global settings
        maxconn 100000                                # maximum number of connections
        chroot /usr/local/haproxy                     # chroot (working) directory
        uid 99                                        # UID of the user haproxy runs as
        gid 99                                        # GID of the group haproxy runs as
        daemon                                        # run haproxy in the background
        nbproc 1                                      # start one haproxy process
        pidfile /usr/local/haproxy/logs/haproxy.pid   # PID file location
        log 127.0.0.1 local3 info                     # where log output is sent

    defaults
        option http-keep-alive                        # keep-alive mode: persistent connections, more efficient
        maxconn 100000
        mode http                                     # traffic type; http by default, tcp for layer-4 forwarding
        timeout connect 5000ms                        # connection timeout
        timeout client 50000ms                        # client-side timeout
        timeout server 50000ms                        # server-side timeout

    listen stats                                      # status page
        mode http
        bind 0.0.0.0:9999                             # listening port
        stats enable                                  # enable the statistics page
        stats uri /haproxy-status                     # URI of the statistics page
        stats auth haproxy:saltstack                  # username:password for authentication

    frontend frontend_www_example_com                 # frontend configuration
        bind 192.168.56.21:80                         # VIP that serves external traffic
        mode http                                     # layer-7 HTTP mode
        option httplog
        log global
        default_backend backend_www_example_com

    backend backend_www_example_com
        option forwardfor header X-REAL-IP
        option httpchk HEAD / HTTP/1.0
        # roundrobin rotates between servers; "balance source" gives session
        # persistence and "balance leastconn" picks the fewest connections.
        balance roundrobin
        # Server definitions. The server id is web-node1; "check inter 2000" is
        # the health-check interval, "rise 30" marks the server up after 30
        # successful checks, "fall 15" marks it down after 15 failed checks.
        # A "weight" option can be added to set the server weight.
        server web-node1 192.168.56.11:8080 check inter 2000 rise 30 fall 15
        server web-node2 192.168.56.12:8080 check inter 2000 rise 30 fall 15

    [root@linux-node1 /srv/salt/prod/cluster]# cat haproxy-outside.sls    # sls file for the externally facing haproxy service
    include:
      - modules.haproxy.install

    haproxy-service:
      file.managed:
        - name: /etc/haproxy/haproxy.cfg
        - source: salt://cluster/files/haproxy-outside.cfg
        - user: root
        - group: root
        - mode: 644
      service.running:
        - name: haproxy
        - enable: True
        - reload: True
        - require:
          - cmd: haproxy-install
        - watch:
          - file: haproxy-service

    [root@linux-node1 /srv/salt/prod/cluster]# cat /srv/salt/base/top.sls    # update the top file
    base:
      '*':
        - init.init
    prod:
      'linux-node*':
        - cluster.haproxy-outside

    [root@linux-node1 /srv/salt/prod/cluster]# salt '*' state.highstate test=True    # dry run first
    [root@linux-node1 /srv/salt/prod/cluster]# salt '*' state.highstate              # apply the haproxy configuration with a highstate run

    Summary for linux-node1.example.com
    -------------
    Succeeded: 21 (changed=4)
    Failed:     0
    -------------
    Total states run: 21

    Summary for linux-node2.example.com
    -------------
    Succeeded: 21 (changed=4)
    Failed:     0
    -------------
    Total states run: 21

    [root@linux-node1 ~]# netstat -tnpl|grep haproxy
    tcp   0   0 0.0.0.0:9999        0.0.0.0:*   LISTEN   22001/haproxy
    tcp   0   0 192.168.56.21:80    0.0.0.0:*   LISTEN   22001/haproxy
    [root@linux-node2 ~]# netstat -tnpl|grep haproxy
    tcp   0   0 0.0.0.0:9999        0.0.0.0:*   LISTEN   22001/haproxy
    tcp   0   0 192.168.56.21:80    0.0.0.0:*   LISTEN   22001/haproxy

    [root@linux-node1 /srv/salt/prod]# tree    # directory structure
    .
    ├── cluster
    │   ├── files
    │   │   └── haproxy-outside.cfg
    │   └── haproxy-outside.sls
    └── modules
        ├── haproxy
        │   ├── files
        │   │   ├── haproxy-1.6.3.tar.gz
        │   │   └── haproxy.init
        │   └── install.sls
        ├── keepalived
        ├── memcached
        ├── nginx
        ├── php
        └── pkg
            └── make.sls
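The `check inter 2000 rise 30 fall 15` settings determine how quickly a backend changes state. As a back-of-the-envelope sketch (it assumes `inter` is in milliseconds, which is the HAProxy default unit, and it ignores check timeouts and any fast-interval options), the delay is roughly the interval multiplied by the number of consecutive checks:

```shell
#!/bin/sh
# Values copied from the server lines: check inter 2000 rise 30 fall 15
inter_ms=2000
rise=30
fall=15

# Approximate time until a state change = interval x consecutive checks.
up_after_s=$(( inter_ms * rise / 1000 ))
down_after_s=$(( inter_ms * fall / 1000 ))

echo "marked up after about ${up_after_s}s of passing checks"
echo "marked down after about ${down_after_s}s of failing checks"
```

With these values a failed web node keeps receiving traffic for about half a minute, so a smaller `fall` may be worth considering where faster failure detection matters.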

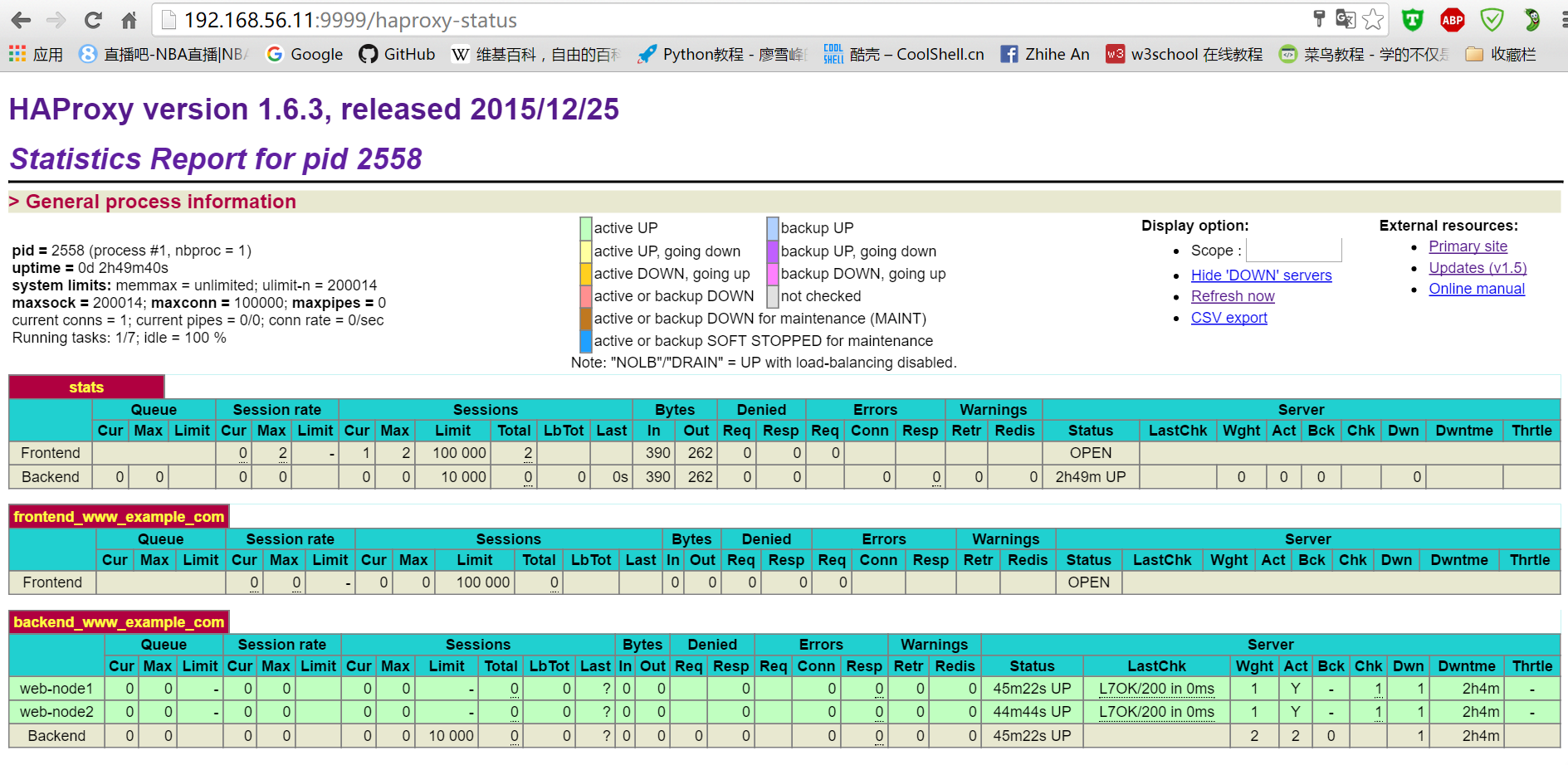

测试,访问http://192.168.56.11:9999/haproxy-status,输入用户名密码,结果如下

4. Deploying keepalived

    [root@linux-node1 ~]# cd /srv/salt/prod/modules/keepalived/ && mkdir files && cd files
    [root@linux-node1 /srv/salt/prod/modules/keepalived/files]# ls    # copy the keepalived tarball, init script and sysconfig file here
    keepalived-1.2.23.tar.gz  keepalived.init  keepalived.sysconfig

    [root@linux-node1 /srv/salt/prod/modules/keepalived]# cat install.sls    # write the install state file
    {% set keepalived_tar = 'keepalived-1.2.23.tar.gz' %}    # define variables with Jinja
    {% set keepalived_source = 'salt://modules/keepalived/files/keepalived-1.2.23.tar.gz' %}

    keepalived-install:
      file.managed:
        - name: /usr/local/src/{{ keepalived_tar }}
        - source: {{ keepalived_source }}
        - mode: 755
        - user: root
        - group: root
      cmd.run:
        - name: cd /usr/local/src && tar zxf {{ keepalived_tar }} && cd keepalived-1.2.23 && ./configure --prefix=/usr/local/keepalived --disable-fwmark && make && make install
        - unless: test -d /usr/local/keepalived
        - require:
          - file: keepalived-install

    /etc/sysconfig/keepalived:
      file.managed:
        - source: salt://modules/keepalived/files/keepalived.sysconfig
        - mode: 644
        - user: root
        - group: root

    /etc/init.d/keepalived:
      file.managed:
        - source: salt://modules/keepalived/files/keepalived.init
        - mode: 755
        - user: root
        - group: root

    keepalived-init:
      cmd.run:
        - name: chkconfig --add keepalived
        - unless: chkconfig --list | grep keepalived
        - require:
          - file: /etc/init.d/keepalived

    /etc/keepalived:
      file.directory:
        - user: root
        - group: root

    [root@linux-node1 /srv/salt/prod/modules/keepalived]# salt '*' state.sls modules.keepalived.install saltenv=prod    # run the installation

    [root@linux-node1 /srv/salt/prod/cluster/files]# cat haproxy-outside-keepalived.conf    # keepalived configuration file
    ! Configuration File for keepalived
    global_defs {
        notification_email {
            saltstack@example.com                       # alert mailbox; several can be listed, one per line
        }
        notification_email_from keepalived@example.com  # sender address for alert mail
        smtp_server 127.0.0.1                           # SMTP server address
        smtp_connect_timeout 30                         # timeout for connecting to the SMTP server
        router_id {{ROUTEID}}                           # identifier of this keepalived server; shown in the mail subject
    }

    vrrp_instance haproxy_ha {
        state {{STATEID}}                               # role of this keepalived node
        interface eth0                                  # network interface monitored for HA
        virtual_router_id 36                            # virtual router ID, a number unique to this VRRP instance; MASTER and BACKUP in the same vrrp_instance must use the same value
        priority {{PRIORITYID}}                         # priority; a higher number wins. Within one vrrp_instance the MASTER priority must be greater than the BACKUP priority
        advert_int 1
        authentication {                                # authentication type and password
            auth_type PASS                              # authentication type, either PASS or AH
            auth_pass 1111                              # password; MASTER and BACKUP in the same vrrp_instance must share it to communicate
        }
        virtual_ipaddress {
            192.168.56.21                               # virtual IP address; several can be listed, one per line
        }
    }
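The `{{ROUTEID}}`, `{{STATEID}}` and `{{PRIORITYID}}` placeholders in this file are filled in when Salt renders it as a Jinja template. To illustrate what the rendered result looks like on each node, here is a small sketch that imitates the substitution with `sed`. Salt uses the Jinja engine, not `sed`; the `render_keepalived` function and the three-line excerpt are hypothetical and exist only for this illustration.

```shell
#!/bin/sh
# Illustration only: mimic the placeholder substitution on a short excerpt.
render_keepalived() {
    # $1 = router id, $2 = state (MASTER or BACKUP), $3 = priority
    sed -e "s/{{ROUTEID}}/$1/" \
        -e "s/{{STATEID}}/$2/" \
        -e "s/{{PRIORITYID}}/$3/"
}

# Three lines excerpted from the template, without the comments.
template='router_id {{ROUTEID}}
state {{STATEID}}
priority {{PRIORITYID}}'

master=$(printf '%s\n' "$template" | render_keepalived haproxy_ha MASTER 150)
backup=$(printf '%s\n' "$template" | render_keepalived haproxy_ha BACKUP 100)
printf '%s\n---\n%s\n' "$master" "$backup"
```

The two results differ only in `state` and `priority`, which is why a single template file can serve both nodes.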

    [root@linux-node1 /srv/salt/prod/cluster]# cat haproxy-outside-keepalived.sls    # integrate keepalived with haproxy
    include:
      - modules.keepalived.install

    keepalived-server:
      file.managed:
        - name: /etc/keepalived/keepalived.conf
        - source: salt://cluster/files/haproxy-outside-keepalived.conf
        - mode: 644
        - user: root
        - group: root
        - template: jinja
        {% if grains['fqdn'] == 'linux-node1.example.com' %}    # select values by the host's FQDN, read from grains
        - ROUTEID: haproxy_ha
        - STATEID: MASTER        # keepalived role
        - PRIORITYID: 150        # priority
        {% elif grains['fqdn'] == 'linux-node2.example.com' %}
        - ROUTEID: haproxy_ha
        - STATEID: BACKUP
        - PRIORITYID: 100
        {% endif %}
      service.running:
        - name: keepalived
        - enable: True
        - watch:
          - file: keepalived-server
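The `if`/`elif` block picks the keepalived role from the minion's FQDN. The same decision can be sketched as a shell `case` statement; the `role_for_host` function is hypothetical and only mirrors the two branches of the state file. It also makes one gap visible: a host that matches neither name gets no values at all.

```shell
#!/bin/sh
# Map a host FQDN to "<state> <priority>", mirroring the Jinja branches.
role_for_host() {
    case "$1" in
        linux-node1.example.com) echo "MASTER 150" ;;
        linux-node2.example.com) echo "BACKUP 100" ;;
        *)
            # No branch matches: nothing sensible to render for this host.
            echo "no keepalived role defined for $1" >&2
            return 1
            ;;
    esac
}

for host in linux-node1.example.com linux-node2.example.com; do
    echo "$host -> $(role_for_host "$host")"
done
```

If more nodes join the cluster, it may be cleaner to move these values into pillar data than to keep extending the conditional.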

    [root@linux-node1 /srv/salt/prod/cluster/files]# cat /srv/salt/base/top.sls    # update the top file
    base:
      '*':
        - init.init
    prod:
      'linux-node*':
        - cluster.haproxy-outside
        - cluster.haproxy-outside-keepalived

    [root@linux-node1 /srv/salt/prod/cluster]# salt '*' state.highstate    # apply
    [root@linux-node1 /srv/salt/prod/cluster]# ip ad li | awk -F "[ :]+" 'NR==11{print $3}'
    192.168.56.21/32
    [root@linux-node2 ~]# ip ad li | awk -F "[ :]+" 'NR==9{print $3}'
    192.168.56.12/24

The VIP is on node1 and not on node2. Stop keepalived on node1 to see whether the VIP fails over:

    [root@linux-node1 /srv/salt/prod/cluster]# /etc/init.d/keepalived stop
    Stopping keepalived (via systemctl):
    [root@linux-node2 ~]# ip ad li | awk -F "[ :]+" 'NR==11{print $3}'
    192.168.56.21/32

The VIP has moved to node2. Once keepalived is started again on node1, the VIP moves back to node1, which confirms that the configuration works.
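The `awk 'NR==11'` check depends on the VIP appearing on a specific output line, which varies with the number of interfaces and addresses. A slightly more robust check searches for the address itself. This is a minimal sketch; the `has_vip` function and the sample text are made up for illustration, and it reads `ip addr` style output on stdin.

```shell
#!/bin/sh
# Succeeds if the given IPv4 address appears as an "inet" entry on stdin.
has_vip() {
    # -F treats the dots literally; the trailing "/" stops
    # 192.168.56.21 from matching a longer address such as 192.168.56.210.
    grep -Fq "inet $1/"
}

# Hypothetical excerpt of "ip addr list" output on the node holding the VIP.
sample='    inet 192.168.56.11/24 brd 192.168.56.255 scope global eth0
    inet 192.168.56.21/32 scope global eth0'

if printf '%s\n' "$sample" | has_vip 192.168.56.21; then
    echo "VIP present"
else
    echo "VIP absent"
fi
```

On a real node the same function would be fed by the live command, for example `ip addr list | has_vip 192.168.56.21 && echo "VIP present"`.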
